Microsoft's approach is to view cloud applications as software plus services. In this model, the cloud is another platform, and applications can either run locally and access cloud services or run entirely in the cloud and be accessed by browsers using standard Service Oriented Architecture (SOA) protocols.

Microsoft calls its cloud operating system the Windows Azure Platform. You can think of Azure as a virtualized infrastructure to which the .NET Framework has been added as a set of .NET Services. The Windows Azure service itself is a hosted environment of virtual machines enabled by a fabric called Windows Azure AppFabric. You can host your application on Azure and provision it with storage, growing it as you need it. The Windows Azure service is an Infrastructure as a Service offering.

A number of services interoperate with Windows Azure, including SQL Azure (a version of SQL Server), SharePoint Services, Azure Dynamic CRM, and many of the Windows Live Services. Together these make up the Windows Azure Platform, which follows the Platform as a Service cloud computing model.

Windows Live Services is a collection of applications and services that run on the Web. Some of these applications, called Windows Live Essentials, are add-ons to Windows and are downloadable as applications. Other Windows Live Services are standalone Web applications viewable in a browser.

Exploring Microsoft Cloud Services

Microsoft Live is only one part of the Microsoft cloud strategy. The second part of the strategy is the extension of the .NET Framework and related development tools to the cloud. To enable .NET developers to extend their applications into the cloud, or to build .NET style applications that run completely in the cloud, Microsoft has created a set of .NET services, which it now refers to as the Windows Azure Platform.

Azure is a virtualized infrastructure on top of which a set of additional enterprise services has been layered, including:

• A virtualization service called Azure AppFabric that creates an application hosting environment. AppFabric (formerly .NET Services) is a cloud-enabled version of the .NET Framework.

• A high capacity non-relational storage facility called Storage.

• A set of virtual machine instances called Compute.

• A cloud-enabled version of SQL Server called SQL Azure Database.

• A database marketplace based on SQL Azure Database code-named “Dallas.”

• An xRM (Anything Relationship Management) service called Dynamics CRM based on Microsoft Dynamics.

• A document and collaboration service based on SharePoint called SharePoint Services.

• Windows Live Services, a collection of services that runs on Windows Live, which can be used in applications that run in the Azure cloud.

Defining the Windows Azure Platform

Azure is Microsoft's Infrastructure as a Service (IaaS) Web hosting service. Set against Amazon's and Google's cloud offerings, Azure (the service) competes with AWS, while the Windows Azure Platform competes with Google's App Engine.

The software plus services approach

Microsoft has a very different vision for cloud services than either Amazon or Google does. In Amazon's case, AWS is a pure infrastructure play. AWS essentially rents you a (virtual) computer on which to run your application. An Amazon Machine Image can be provisioned with an operating system, an enterprise application, or application stack, but that provisioning is not a prerequisite. An AMI is your machine, and you can configure it as you choose. AWS is a deployment enabler.

Google's approach with its Google App Engine (GAE) is to offer a cloud-based development platform on which you can add your program, provided that the program speaks the Google App Engine API and uses objects and properties from the App Engine framework. Google makes it possible to program in a number of languages, but you must write your applications to conform to Google's infrastructure. Google App Engine lets you create a saleable cloud-based application, but that application can only work within the Google infrastructure, and the application is not easily ported to other environments.

Microsoft sees the cloud as a complementary platform to its other platforms. The company envisages a scenario where a Microsoft developer with an investment in an application wants to extend that application's availability to the cloud. Perhaps the application runs on a server, desktop, or mobile device running some form of Windows. Microsoft calls this approach software plus services.

The Windows Azure Platform allows a developer to modify his application so it can run in the cloud on virtual machines hosted in Microsoft datacenters. Windows Azure serves as a cloud operating system, and the suitably modified application can be hosted on Azure as a runtime application where it can make use of the various Azure Services. Additionally, local applications running on a server, desktop, or mobile device can access Windows Azure Services through the Windows Services Platform API.

The Azure Platform

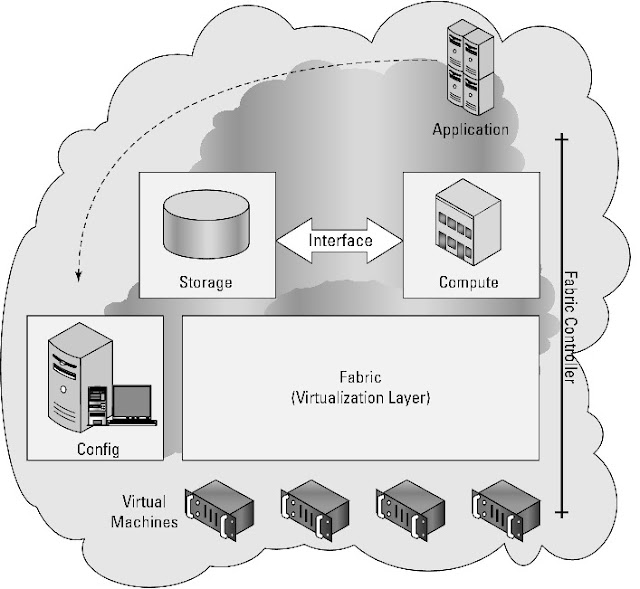

With Azure's architecture (shown in Figure 10.4), an application can run locally, run in the cloud, or run in some combination of the two. Applications on Azure can be run as applications, as background processes or services, or as both.

The Azure Windows Services Platform API uses the industry standard REST, HTTP, and XML protocols that are part of any Service Oriented Architecture cloud infrastructure to allow applications to talk to Azure. Developers can install a client-side managed class library that contains functions that can make calls to the Azure Windows Services Platform API as part of their applications. These API functions have been added to Microsoft Visual Studio as part of Microsoft's Integrated Development Environment (IDE).

The Azure Service Platform hosts runtime versions of .NET Framework applications written in any of the languages in common use, such as Visual Basic, C++, C#, and Java, as well as any application that has been compiled for .NET's Common Language Runtime (CLR). Azure also can deploy Web-based applications built with ASP.NET, the Windows Communication Foundation (WCF), and PHP, and it supports Microsoft's automated deployment technologies. Microsoft also has released SDKs for both Java and Ruby so that applications written in those languages can place calls through the Azure Service Platform API to the AppFabric Service.

The Windows Azure service

Windows Azure is a virtualized Windows infrastructure run by Microsoft on a set of datacenters around the world.

Six main elements are part of Windows Azure:

- Application: This is the runtime of the application that is running in the cloud.

- Compute: This is the load-balanced Windows server computation and policy engine that allows you to create and manage virtual machines that serve in either a Web role or a Worker role.

A Web role is a virtual machine instance running Microsoft IIS Web server that can accept and respond to HTTP or HTTPS requests. A Worker role can accept and respond to requests, but doesn't run IIS in that virtual machine. Worker roles can communicate with Azure Storage or through direct connections to clients.

- Storage: This is a non-relational storage system for large-scale storage.

Azure Storage Service lets you create drives, manage queues, and store BLOBs (Binary Large Objects). You manipulate content in Azure Storage using the REST API, which is based on standard HTTP requests and is therefore platform-independent. Stored data can be read using GETs, written with PUTs, modified with POSTs, and removed with DELETE requests. Azure Storage plays the same role in Azure that Amazon Simple Storage Service (S3) plays in Amazon Web Services. For relational database services, SQL Azure may be used.

- Fabric: This is the Windows Azure Hypervisor, which is a version of Hyper-V that runs on Windows Server 2008.

- Config: This is a management service.

- Virtual machines: These are instances of Windows that run the applications and services that are part of a particular deployment.
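The mapping between storage operations and HTTP verbs described under Storage above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper name `build_storage_request`, the operation labels, and the `example.invalid` address are hypothetical, the requests are constructed but never sent, and a real Azure Storage call would also need authentication headers that are omitted here.

```python
from urllib.request import Request

# Verb mapping as described in the text; a real service may use
# different verbs for specific operations.
VERB_FOR_OPERATION = {
    "read": "GET",
    "write": "PUT",
    "modify": "POST",
    "remove": "DELETE",
}


def build_storage_request(operation, url, body=None):
    """Return an unsent HTTP request for the given storage operation."""
    method = VERB_FOR_OPERATION[operation]
    return Request(url, data=body, method=method)


# Hypothetical blob address; the .invalid domain never resolves.
BLOB_URL = "https://example.invalid/mycontainer/report.txt"

for operation, body in [("write", b"first draft"), ("read", None), ("remove", None)]:
    request = build_storage_request(operation, BLOB_URL, body)
    print(request.get_method(), request.full_url)
```

Because every operation is an ordinary HTTP request against a URL, any language with an HTTP client can play the same role, which is the platform independence the text refers to.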

The Windows Azure Platform extends applications running on other platforms to the cloud using Microsoft infrastructure and a set of enterprise services.

Windows Azure is a virtualized infrastructure that provides configurable virtual machines, independent storage, and a configuration interface. The portion of the Azure environment that creates and manages a virtual resource pool is called the Fabric Controller. Applications that run on Azure are memory-managed, load-balanced, replicated, and backed up through snapshots automatically by the Fabric Controller.
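The division of labor between a Web role and a Worker role, described earlier in this section, can be pictured with a small in-process simulation. This is a sketch, not Azure code: Python's `queue.Queue` stands in for an Azure Storage queue, a thread stands in for the Worker role's virtual machine, and the names `run_demo`, `web_role_handle`, and `worker_role_loop` are invented for illustration.

```python
import queue
import threading


def run_demo(payloads):
    """Simulate a Web role handing jobs to a Worker role through a queue."""
    work_queue = queue.Queue()  # stand-in for an Azure Storage queue
    results = {}

    def web_role_handle(request_id, payload):
        # A Web role would receive this over HTTP or HTTPS via IIS;
        # here it only enqueues the job and returns immediately.
        work_queue.put((request_id, payload))
        return "accepted"

    def worker_role_loop():
        # A Worker role runs without IIS and processes queued jobs.
        while True:
            item = work_queue.get()
            if item is None:  # sentinel: no more work
                break
            request_id, payload = item
            results[request_id] = payload.upper()  # placeholder for real work

    worker = threading.Thread(target=worker_role_loop)
    worker.start()
    for request_id, payload in enumerate(payloads):
        web_role_handle(request_id, payload)
    work_queue.put(None)
    worker.join()
    return results


print(run_demo(["resize image", "send email"]))
```

Decoupling the two roles through a queue is one common way to let the request-facing instances stay responsive while background instances absorb slower work.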

Windows Azure AppFabric

Windows Azure AppFabric provides a comprehensive cloud middleware platform for developing, deploying and managing applications on the Windows Azure Platform. It delivers additional developer productivity, adding in higher-level Platform-as-a-Service (PaaS) capabilities on top of the familiar Windows Azure application model. It also enables bridging your existing applications to the cloud through secure connectivity across network and geographic boundaries, and by providing a consistent development model for both Windows Azure and Windows Server. Finally, it makes development more productive by providing a higher abstraction for building end-to-end applications, and simplifies management and maintenance of the application as it takes advantage of advances in the underlying hardware and software infrastructure.

- Middleware Services: platform capabilities as services, which raise the level of abstraction and reduce complexity of cloud development.

- Composite Applications: a set of new innovative frameworks, tools and composition engine to easily assemble, deploy, and manage a composite application as a single logical entity.

- Scale-out application infrastructure: optimized for cloud-scale services and mid-tier components.

Azure Content Delivery Network

The Windows Azure Content Delivery Network (CDN) is a worldwide content caching and delivery system for Windows Azure blob content. Currently, more than 18 Microsoft datacenters, referred to as endpoints, host this service in Australia, Asia, Europe, South America, and the United States. CDN is an edge network service that lowers latency and maximizes bandwidth by delivering content to users from an endpoint near them.

SQL Azure

SQL Azure is a cloud-based relational database service that is based on Microsoft SQL Server. Initially, this service was called SQL Server Data Service. An application that uses SQL Azure Database can run locally on a server, PC, or mobile device, in a datacenter, or on Windows Azure. Data stored in an SQL Azure database is accessed using the Tabular Data Stream (TDS) protocol, the same protocol used for a local SQL Server database. SQL Azure Database supports Transact-SQL statements.

Note: Because SQL Azure is managed in the cloud, there are no administrative controls over the SQL engine. You can't shut the system down, nor can you directly interact with the SQL Servers.
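Because SQL Azure speaks the same TDS protocol and Transact-SQL dialect as a local SQL Server, moving an application between the two is largely a matter of changing the connection string. The sketch below illustrates that idea under several assumptions: the helper `build_connection_string`, the server, database, and credential values, and the choice of ODBC driver are all hypothetical, and the `user@server` login form reflects a convention historically used with SQL Azure that may not be required in every setup. Nothing here opens a connection.

```python
def build_connection_string(server, database, user, password, azure=False,
                            driver="ODBC Driver 17 for SQL Server"):
    """Build an ODBC-style connection string for local SQL Server or SQL Azure."""
    if azure:
        # SQL Azure servers are addressed by a fully qualified host name over TCP.
        host = f"tcp:{server}.database.windows.net,1433"
        uid = f"{user}@{server}"
        extra = "Encrypt=yes;"
    else:
        host = server
        uid = user
        extra = ""
    return (f"Driver={{{driver}}};Server={host};Database={database};"
            f"Uid={uid};Pwd={password};{extra}")


# The same Transact-SQL statement would be sent in either case.
QUERY = "SELECT TOP 5 name FROM sys.tables ORDER BY name;"

# Hypothetical names used only for illustration.
print(build_connection_string("devbox", "inventory", "appuser", "secret"))
print(build_connection_string("myserver", "inventory", "appuser", "secret", azure=True))
print(QUERY)
```

In practice these strings would be handed to a database driver; the point of the sketch is that the query text stays the same and only the connection target changes.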

Windows Live Essentials

Windows Live Essentials applications are a collection of client-side applications that must be downloaded and installed on a desktop. Some of these applications were once part of Windows and have been unbundled from the operating system; others are entirely new. Live Essentials rely on cloud-based services for their data storage and retrieval, and in some cases for their processing.

Windows Live Essentials currently includes the following:

• Family Safety

• Windows Live Messenger

• Photo Gallery

• Mail

• Movie Maker
